GPU-on-RAN: Does It Make Economic Sense?

Turning Towers into Thinkers: Why Energy-to-Intelligence Works in Physics but Still Fails in Telco Economics

The Question

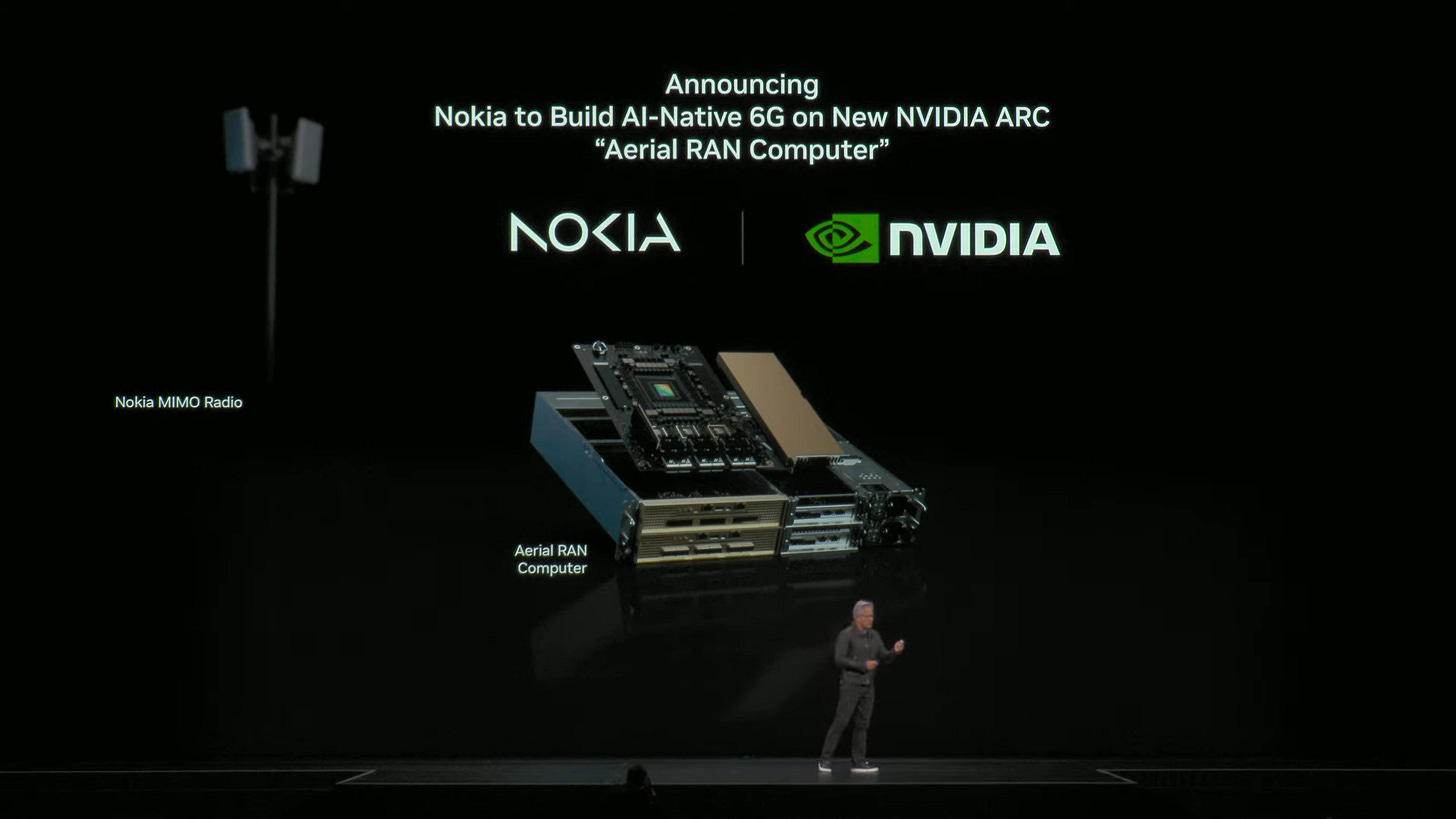

Jensen Huang says the new economy runs on energy converted into intelligence. That statement is correct in physics, but in economics it is wrong until the numbers prove otherwise. Telcos already convert more than seventy terawatt-hours of electricity each year into wireless capacity. The proposal now on the table is to divert part of that power to GPUs in the RAN baseband, so the network produces inference as well as connectivity. The idea sounds efficient: every tower becomes an AI cell. The real test is whether it pays for itself.

The measurement units are simple:

Tokens per joule and dollars per token.
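Written out, the two units look like this. It is a minimal formulation assuming $N$ tokens generated with energy $E$ in joules, an average token rate $R$ in tokens per second, an average power draw $P$ in watts, an electricity price $p_e$ in dollars per kWh, and $C_{\text{other}}$ for every non-energy cost (amortized hardware, site, operations). These symbols are introduced here for illustration; the conversion uses $1\ \text{kWh} = 3.6 \times 10^{6}\ \text{J}$.

$$
\eta = \frac{N}{E} = \frac{R}{P} \qquad \left[\frac{\text{tokens}}{\text{J}}\right]
$$

$$
c = \frac{E \cdot p_e / (3.6 \times 10^{6}) + C_{\text{other}}}{N} = \frac{p_e}{3.6 \times 10^{6}\,\eta} + \frac{C_{\text{other}}}{N} \qquad \left[\frac{\$}{\text{token}}\right]
$$

The second form shows why the two units are linked: at a fixed electricity price, the energy share of the cost per token falls in direct proportion as tokens per joule rise.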

If a radio site draws three hundred watts continuously, how many reasoning tokens can it generate with the compute left over after its radio duties? How does that efficiency compare to a hyperscale data center running the same silicon at seventy percent utilization, with controlled cooling and constant demand?
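The comparison above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a model of any real deployment. Only the 300 W draw and the 70 percent data-center utilization come from the text. The peak token rate, the RAN site's 30 percent spare-capacity utilization, the electricity price, and the amortized hardware cost are hypothetical placeholders, and the `Site` class and its field names are invented for this example. The data center is assumed to run the same silicon at the same draw and cost, so utilization is the only variable. The sketch also charges the site's entire power draw to inference, a deliberately conservative simplification for a baseband that also carries radio traffic.

```python
from dataclasses import dataclass

JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules
SECONDS_PER_HOUR = 3600


@dataclass
class Site:
    """An inference site described by hypothetical, illustrative parameters."""
    name: str
    power_w: float             # average draw in watts (J/s), all charged to inference
    peak_tokens_per_s: float   # throughput if the GPU ran inference 100% of the time
    utilization: float         # fraction of time actually spent generating tokens (0..1)
    energy_usd_per_kwh: float  # electricity price
    capex_usd_per_hour: float  # amortized hardware and site cost per hour

    def tokens_per_second(self) -> float:
        return self.peak_tokens_per_s * self.utilization

    def tokens_per_joule(self) -> float:
        # Delivered tokens divided by all energy drawn, idle time included.
        return self.tokens_per_second() / self.power_w

    def usd_per_token(self) -> float:
        tokens_per_hour = self.tokens_per_second() * SECONDS_PER_HOUR
        kwh_per_hour = self.power_w * SECONDS_PER_HOUR / JOULES_PER_KWH
        usd_per_hour = kwh_per_hour * self.energy_usd_per_kwh + self.capex_usd_per_hour
        return usd_per_hour / tokens_per_hour


# PLACEHOLDER inputs: only 300 W and the 0.70 data-center utilization come from the text.
# Same silicon, power, and costs at both sites, so only utilization differs.
ran = Site("RAN site", power_w=300, peak_tokens_per_s=1000, utilization=0.30,
           energy_usd_per_kwh=0.15, capex_usd_per_hour=0.50)
dc = Site("Data center", power_w=300, peak_tokens_per_s=1000, utilization=0.70,
          energy_usd_per_kwh=0.15, capex_usd_per_hour=0.50)

for site in (ran, dc):
    print(f"{site.name}: {site.tokens_per_joule():.3f} tokens/J, "
          f"${site.usd_per_token():.2e} per token")
```

Because every other input is held equal, the efficiency gap in this sketch is exactly the utilization ratio (0.70 / 0.30). A fuller model would add a cooling-overhead factor for each site and split the baseband's draw between radio processing and inference.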

The objective of this analysis is to run that math without a narrative. It treats the baseband as an energy converter and asks how much usable intelligence it can produce per watt and how much that output is worth in today’s market. If GPUs on RAN cannot match the economics of centralized inference, they will remain an engineering experiment rather than an industrial model.

The Machine

A GPU-on-RAN baseband is a radical shift from traditional telecom hardware.


Subscribe to Sebastian Barros Newsletter to keep reading this post and get 7 days of free access to the full post archives.